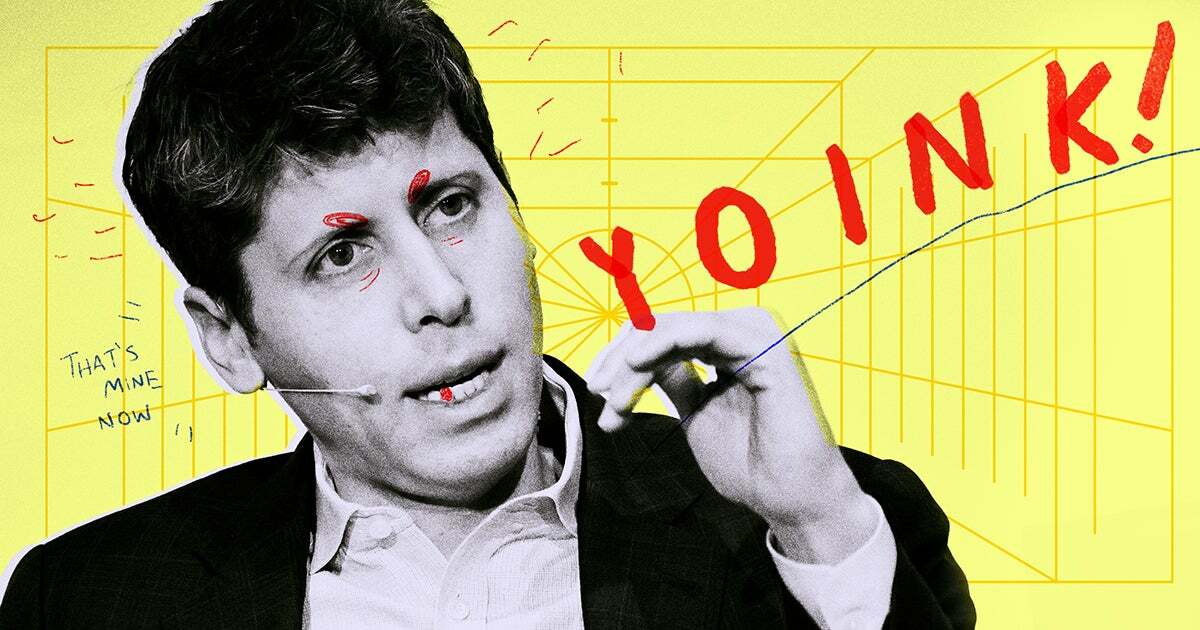

"My core theses — The Rot Economy (that the tech industry has become dominated by growth), The Rot-Com Bubble (that the tech industry has run out of hyper-growth ideas), and that generative AI has created a kind of capitalist death cult where nobody wants to admit that they're not making any money — are far from comfortable.

The ramifications of a tech industry that has become captured by growth are that true innovation is being smothered by people that neither experience nor know how (or want) to fix real problems, and that the products we use every day are being made worse for a profit. These incentives have destroyed value-creation in venture capital and Silicon Valley at large, lionizing those who are able to show great growth metrics rather than creating meaningful products that help human beings.

The ramifications of the end of hyper-growth mean a massive reckoning for the valuations of tech companies, which will lead to tens of thousands of layoffs and a prolonged depression in Silicon Valley, the likes of which we've never seen.

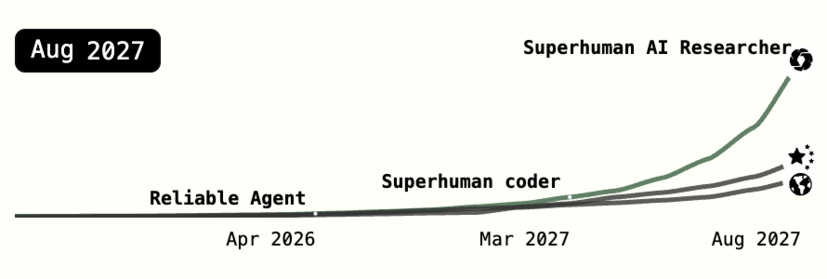

The ramifications of the collapse of generative AI are much, much worse. On top of the fact that the largest tech companies have burned hundreds of billions of dollars to propagate software that doesn't really do anything that resembles what we think artificial intelligence looks like, we're now seeing that every major tech company (and an alarming amount of non-tech companies!) is willing to follow whatever it is that the market agrees is popular, even if the idea itself is flawed.

Generative AI has laid bare exactly how little the markets think about ideas, and how willing the powerful are to try and shove something unprofitable, unsustainable and questionably-useful down people's throats as a means of promoting growth.

(...)

In short, reality can fucking suck, but a true skeptic learns to live in it."

https://www.wheresyoured.at/optimistic-cowardice/