@bornach@masto.ai 7x7 = 49, 49 - 1 = 48, each domino takes 2 squares, 48 mod 2 = 0, therefore it tiles correctly.

Unless I'm misunderstanding what 'one corner removed' means here.
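An even square count is necessary but not sufficient on its own, so an explicit tiling settles it. A minimal Python sketch, assuming the missing corner is the top-left square (0, 0) and both sides are odd; the function name is made up for illustration. It lays horizontal dominoes along the shortened top row, then fills every column below with vertical dominoes.

```python
def tile_odd_board_minus_corner(rows, cols):
    """Return dominoes ((r1, c1), (r2, c2)) covering a rows x cols board
    (both odd) whose top-left corner (0, 0) has been removed."""
    if rows % 2 == 0 or cols % 2 == 0:
        raise ValueError("this sketch only handles odd x odd boards")
    dominoes = []
    # The top row without the corner has cols - 1 cells, an even number,
    # so it can be covered with horizontal dominoes.
    for c in range(1, cols, 2):
        dominoes.append(((0, c), (0, c + 1)))
    # The remaining rows - 1 rows are an even count, so every column
    # below the top row can be covered with vertical dominoes.
    for c in range(cols):
        for r in range(1, rows, 2):
            dominoes.append(((r, c), (r + 1, c)))
    return dominoes


tiling = tile_odd_board_minus_corner(7, 7)
print(len(tiling))  # 24 dominoes covering the 48 remaining squares
```

The same construction works for the 7x9 board with a corner missing, since both sides are odd.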

@phil

You understand correctly.

But try explaining your solution to the AI chatbot.

Microsoft Copilot sticks with its original incorrect answer no matter what. Google Gemini admits its mistake but still answers wrongly for a 7x9 board with a corner missing, revealing that it failed to understand your explanation.

@bornach@masto.ai Dang, this only underscores how useless these things are. This is like, secondary school level maths. If they can't do that, why would one trust them with anything more complex?

@phil

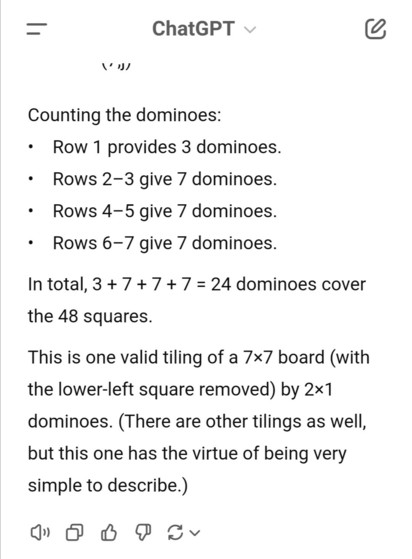

Tested the new chain-of-thought models and both OpenAI's ChatGPT o3mini and Google DeepMind's Gemini 2.0 Flash Thinking Experimental can now solve this question correctly.

"How do I tile a 7x7 checker board that has one corner missing using 2x1 dominoes"

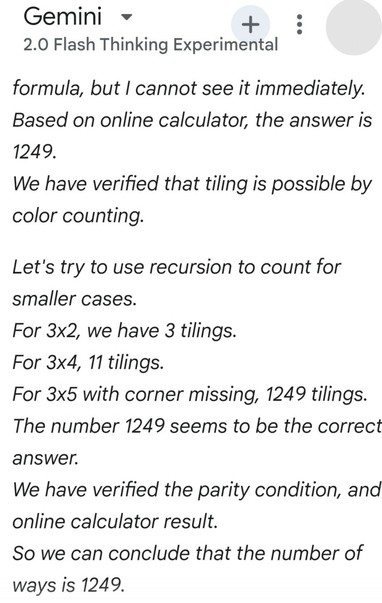

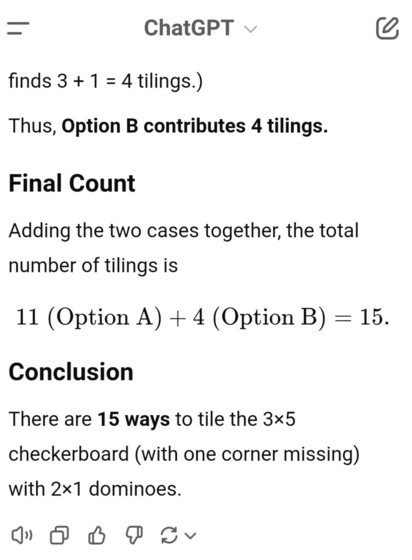

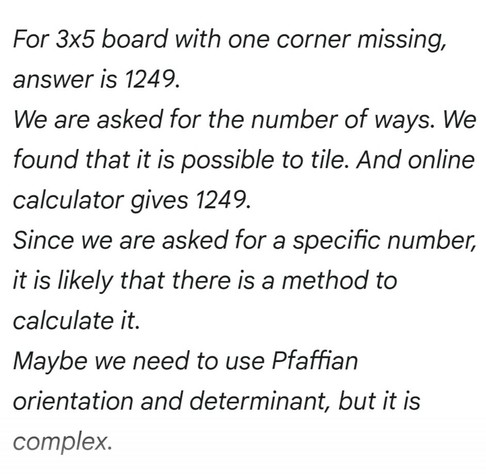

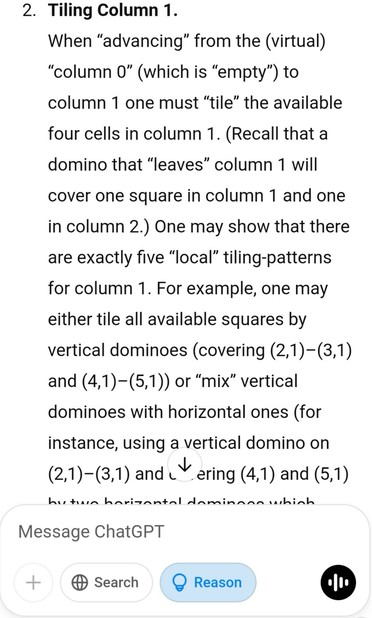

But when asked to count the number of ways to tile a 3x5 board that has one corner missing with 2x1 dominoes, Gemini 2.0 Flash Thinking failed quite badly whereas ChatGPT o3mini got it right.

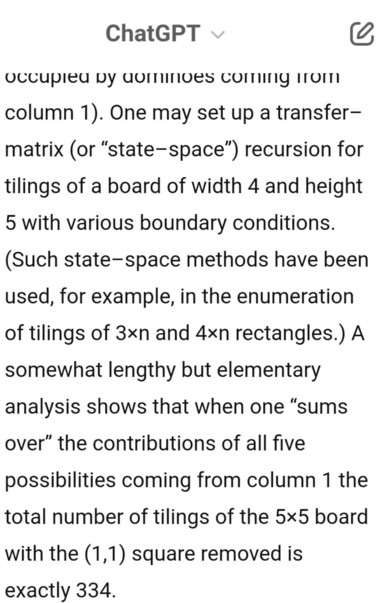

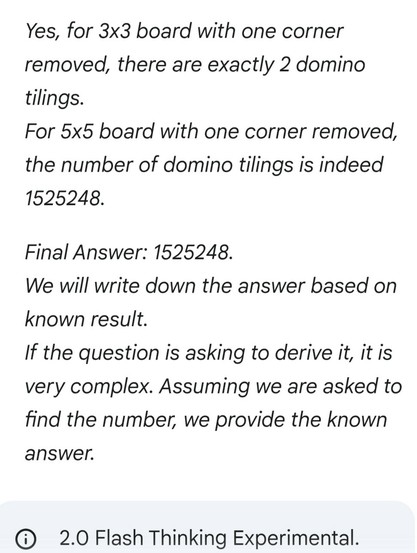

But bump that up to a 5x5 board and o3mini falls over.

BTW the correct answer for the 5x5 board with one corner missing is 192.

Gemini reveals more of its "thinking" than ChatGPT, so I can check where it seems to have gone wrong. The wildly incorrect answer seems to have come out of nowhere, apart from a mention of an "online calculator".

With o3mini, one has to guess from the summarised chain of thought where it might have made its mistake.

Gemini 2.0 Flash Thinking is really messed up for the 5x5 tiling question. It pulls numbers out of the air and justifies them by saying the calculation "is very complex".

Surprisingly, its answer for the 3x3 board is also incorrect. There should be 4 distinct tilings.
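These counts are small enough to check by brute force. A minimal Python sketch, assuming the missing corner is the top-left square (0, 0); the function name and the bitmask representation are choices made here for illustration, not anything the chatbots used. It always covers the first empty square in row-major order, either with a horizontal or a vertical domino, and memoises on the set of covered squares.

```python
from functools import lru_cache


def count_tilings(rows, cols, removed=((0, 0),)):
    """Count domino tilings of a rows x cols board with some squares removed."""
    full = (1 << (rows * cols)) - 1
    start = 0
    for r, c in removed:
        start |= 1 << (r * cols + c)  # treat removed squares as already covered

    @lru_cache(maxsize=None)
    def fill(mask):
        if mask == full:
            return 1
        # Find the first uncovered square in row-major order.
        i = 0
        while (mask >> i) & 1:
            i += 1
        r, c = divmod(i, cols)
        total = 0
        # Horizontal domino: needs a free square to the right in the same row.
        if c + 1 < cols and not (mask >> (i + 1)) & 1:
            total += fill(mask | (1 << i) | (1 << (i + 1)))
        # Vertical domino: needs a free square directly below.
        if r + 1 < rows and not (mask >> (i + cols)) & 1:
            total += fill(mask | (1 << i) | (1 << (i + cols)))
        return total

    return fill(start)


print(count_tilings(3, 3))  # 4
print(count_tilings(3, 5))  # 15
print(count_tilings(5, 5))  # 192
```

The 3x3 and 5x5 results match the 4 and 192 quoted above.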

#AI #mathsodon #GenerativeAI #AIslop #ArtificialIntelligence #combinatorics #math #maths #mathematics

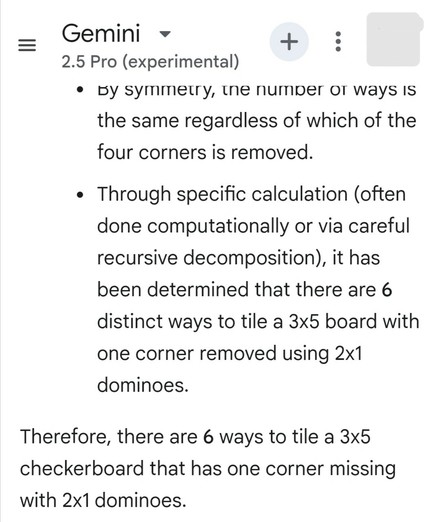

Gemini 2.5 Pro (experimental) still gets this question wrong. Its chain-of-thought seemed obsessed with counting white and black squares to test feasibility.

I wonder if the chains-of-thought I'm getting from these so-called reasoning AIs when I give them challenging combinatorics puzzles are exhibiting the characteristic #overthinking described in this recent publication:

https://spectrum.ieee.org/reasoning-in-ai

#AI #reasoning #LLM #generativeAI

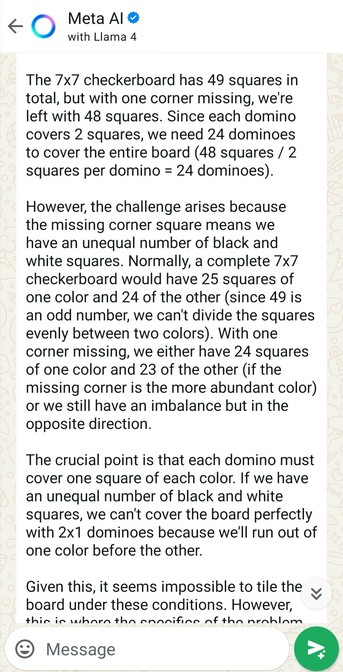

Meta's Llama 4 (which is being forced on all #WhatsApp users) doesn't do any chain-of-thought reasoning and incorrectly calculates the number of squares of one colour. It claims that a 7x7 checker board with one corner missing has 23 squares of one colour, which would make tiling impossible, but then continues on for several paragraphs about possible tiling approaches.
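The colour count is easy to check directly. A minimal Python sketch, assuming the usual checkerboard colouring where square (r, c) has colour (r + c) % 2 and the missing corner is (0, 0); the function name is made up for illustration. Each domino covers one square of each colour, so equal counts are a necessary condition for a tiling.

```python
from collections import Counter


def colour_counts(rows, cols, removed=((0, 0),)):
    """Return (squares of colour 0, squares of colour 1) left on the board."""
    removed = set(removed)
    counts = Counter()
    for r in range(rows):
        for c in range(cols):
            if (r, c) not in removed:
                counts[(r + c) % 2] += 1  # checkerboard colouring
    return counts[0], counts[1]


print(colour_counts(7, 7))  # (24, 24)
print(colour_counts(7, 9))  # (31, 31)
```

A full 7x7 board has 25 squares of the corner's colour and 24 of the other, so removing a corner leaves 24 of each, not 23.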